GPT and Me

DALL-E 2 and Imagen are the hot new models everyone is sharing: the eye candy is undeniable. But since the original release of the GPT-3 large language model, OpenAI has quietly released the GPT API and playground for anyone to try. Here's a collection of my experiments with the most recent version of the model, text-davinci-002.

Green-highlighted text is by GPT; all other text is my prompts.

Retrieval

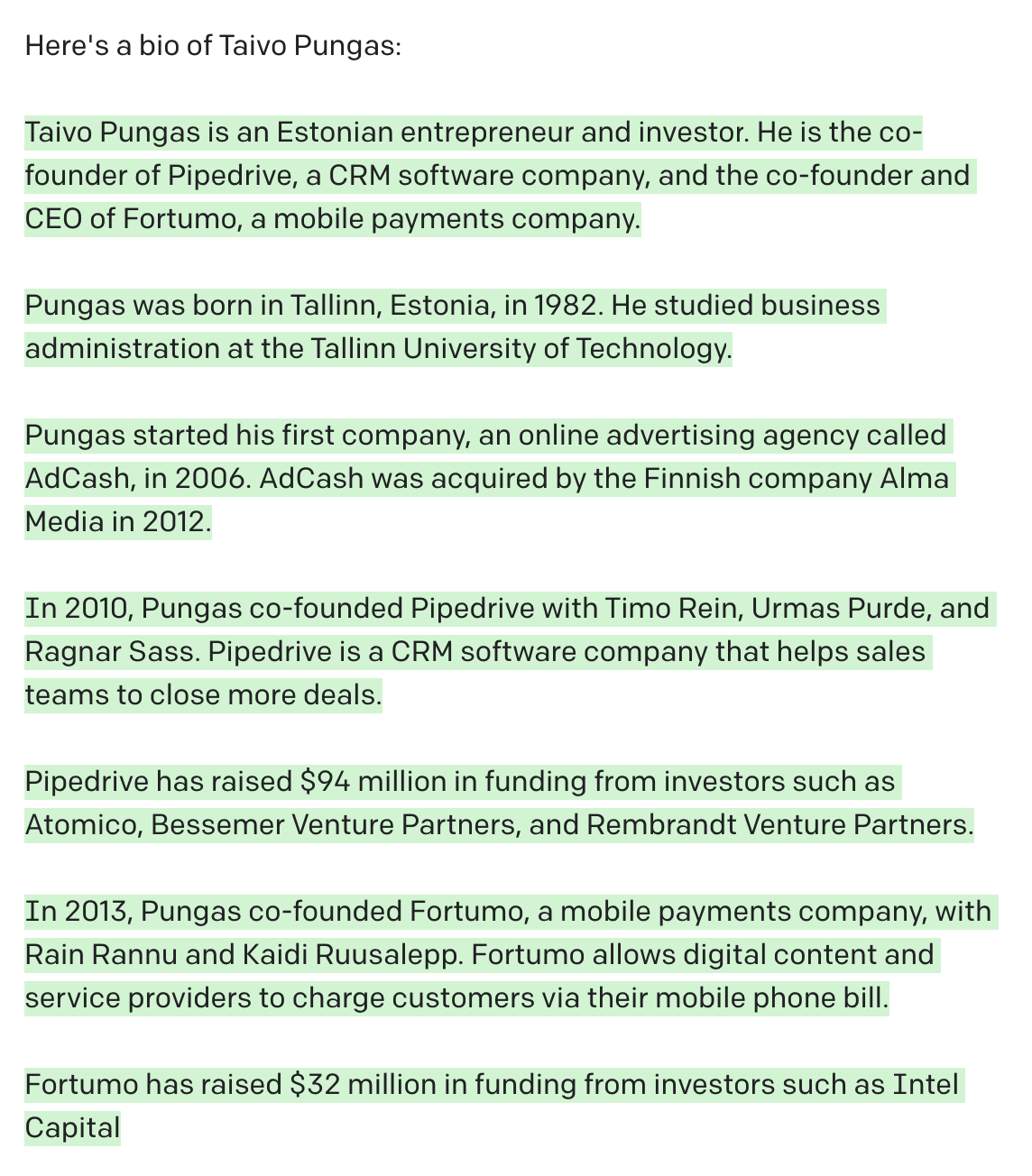

Can the model retrieve information? Of course. It can write a decent bio about Barack Obama. But how about someone with a small internet presence who is generally unknown?

Well... Other than getting it correct that I am Estonian, there isn't much truth to this. But it's a funny amalgam of several Estonian founders.

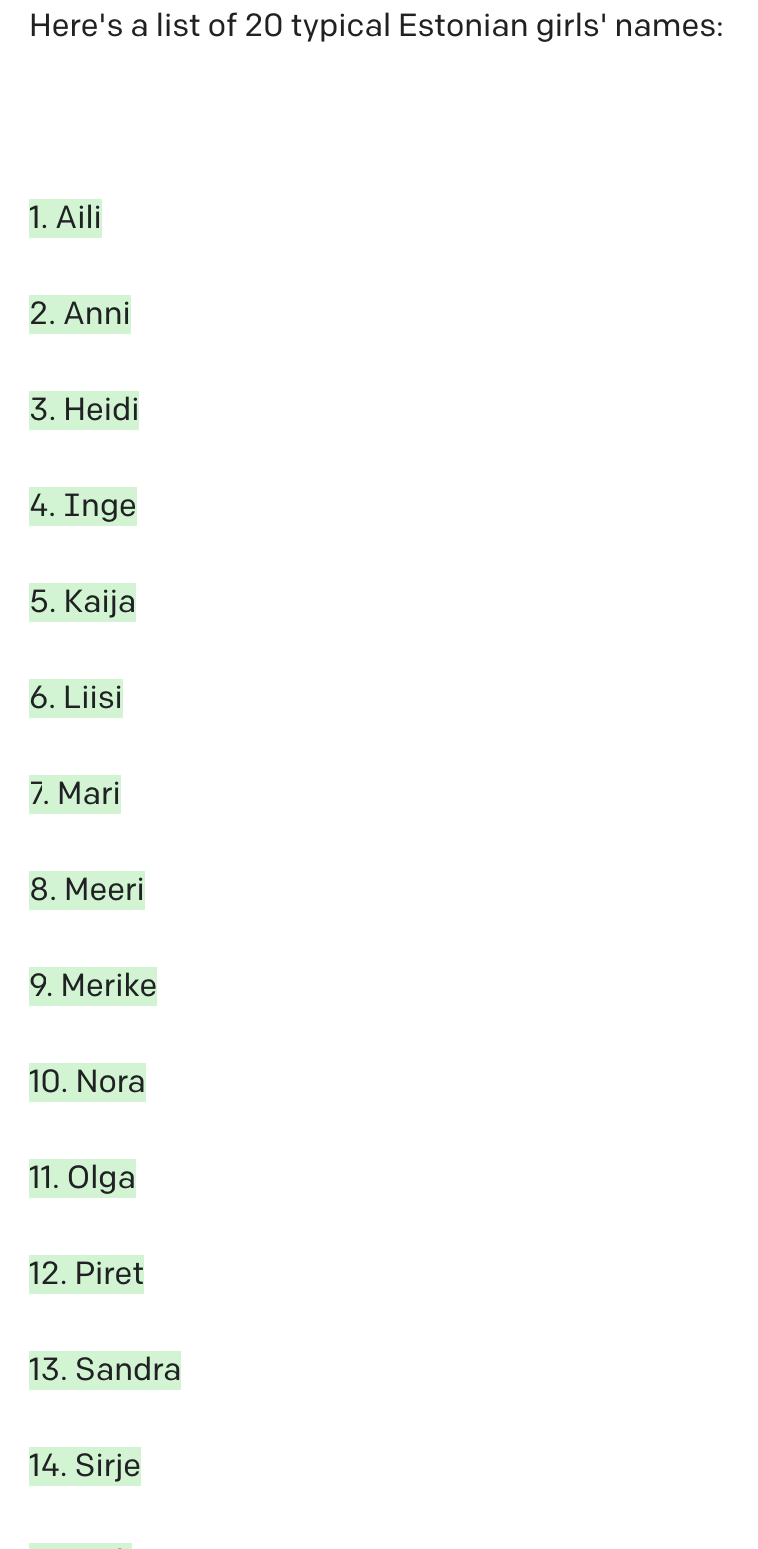

Speaking of Estonia...

Mostly correct: these are Estonian women's names with the niggle that Kaija isn't typical.

GPT is meant for prompting in English. How about prompting in Estonian? Since the model is trained on a corpus of the internet, surely there's some Estonian text in there.

Both factually and grammatically wrong. But not by much!

GPT and reading

Can the model make novel connections between ideas? I fed in a list of all note titles I have in my Obsidian vault and asked for unexpected connections.
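For anyone who wants to repeat this, here is a minimal sketch of how such a prompt could be assembled. It assumes the vault is a folder of Markdown files where each file name is the note title, which is how Obsidian stores notes. The function names and the prompt wording are illustrative assumptions, not the exact prompt I used, and sending the text to the model is left out.

```python
from pathlib import Path


def note_titles(vault_dir: str) -> list[str]:
    """Return the sorted titles (file stems) of all Markdown notes in a vault."""
    return sorted(p.stem for p in Path(vault_dir).rglob("*.md"))


def connections_prompt(titles: list[str], n_connections: int = 5) -> str:
    """Build a plain-text prompt asking for unexpected links between notes.

    The wording is a hypothetical example, not the original prompt.
    """
    bullet_list = "\n".join(f"- {title}" for title in titles)
    return (
        "Here is a list of note titles:\n"
        f"{bullet_list}\n\n"
        f"List {n_connections} unexpected connections between these notes:\n"
    )
```

The resulting string can be pasted into the playground as-is. With a large vault the list may exceed the model's context window, so trimming the titles to a subset is probably needed.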

Some of the results are copy-paste, some make sense, but overall there is nothing novel. If I fed in the actual note contents I could get better results, but I'm rather pessimistic.

How about summarisation? I fed in some of my notes from reading Waking Up by Sam Harris and asked for a summary.

A decent summary, but mostly a copy-paste of the notes. And I wouldn't say the shorter versions add much value over my notes – maybe only for SEO.

For comparison, I asked the model to give me what it knew about the book purely off of the internet (not prompted by my notes):

I guess this is an okay description of the themes in the book? It is high-level and would probably work as book-jacket copy or a teaser. Most likely that's what the model is retrieving, plus a bunch of book summaries/reviews optimized for SEO.

GPT and writing

One specific form of summarization is writing blog post titles. If the model could do that well, I could use it in my writing! (The hard part of coming up with a title is generating tens of different options. Choosing the best one is much easier, at least for me.)

I fed in the contents of my post on caffeine and diffuse mode thinking and asked for a summary first.

The summary is a good one! How about titles?

I hate search engine optimized titles but I wanted to see if the model could come up with titles in that category. All the above would make sense in an SEO-optimized self-help blog.

But then I hit a real gem of a prompt:

For context, my human-generated title for the post was "Diffuse mode: thinking by relaxing". But I seriously think "The Luxury of a Slow Day" is an improvement. I might start generating title ideas with GPT! Also, "The Magic of Being Present" just sounds so lovely, even if it's not exactly the best title for the caffeine post.

Based on my experiments, two parts of this prompt do the heavy lifting: the word "creative", and the five-word limit. I think the creativeness prompt primes the model to be less mechanical and more graceful. And the word limit makes sure that every word counts – less is more.
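To make the two ingredients concrete, here is a rough sketch of a prompt builder with both knobs exposed. The function names and the exact wording are assumptions for illustration, not the prompt from my screenshot. The small `word_count` helper is there because the limit seems to act as a nudge rather than a hard rule: "The Luxury of a Slow Day" is six words long.

```python
def title_prompt(post_text: str, max_words: int = 5, n_titles: int = 10) -> str:
    """Append a title request to the post text.

    Hypothetical wording; the two parts that matter are the word
    "creative" and the explicit word limit.
    """
    return (
        f"{post_text.strip()}\n\n"
        f"Write {n_titles} creative titles for the blog post above, "
        f"each at most {max_words} words long:\n1."
    )


def word_count(title: str) -> int:
    """Count whitespace-separated words in a candidate title."""
    return len(title.split())
```

Ending the prompt with "1." is a common trick to steer a completion model toward a numbered list. Since choosing among candidates is the easy part, I would use `word_count` to flag overlong titles rather than to discard them.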

By the way, while writing the paragraph on what makes this prompt work, I was looking for the right word to describe the vibe change from SEO-optimized titles to the creative titles. I described the feeling to GPT and the result was much better than I could have gotten with a thesaurus.

Maybe the model can output emojis?

It gave me Slack-like emoji names instead of actual emojis. But the first result is not bad:

☕ + 🤔 = ?

Can we come up with good social media shares for this blog post?

It's a decent excerpt, but it's just a copy of the first paragraph. Not a clever pun, so that's a failure – though to be fair, humor is one of the most difficult NLP problems. I'm also humbled that GPT thought Tim Urban would share it. If you were wondering, the t.co link does not work.

While we're talking about links: could this work?

Nope. The link doesn't lead to a particular PDF, although the portal is for FOIA-released documents, so it probably does contain interesting public stuff.

Back to the blog:

Fair feedback. Instead of explaining diffuse mode, I linked to an explanation, but GPT can't see the link.

Let's ask for more specific feedback.

These are too generic to be useful – these five points apply to every piece of writing. Let's improve the prompt:

Both examples of rephrasing would be improvements. I think Grammarly would be equally good or better for this, though.

Let's try to expand the post:

I don't think any of these would make the post much better, though I could definitely flesh the topic out more using these prompts. It would be more valuable if the post made a new connection instead of going deep on the same topic:

Interesting connection! I'm not sure this is true, but it's a valuable direction to explore. Let's try the same prompt again:

A sketchy physics metaphor... why not!

I tried a few more times and this is the best I got:

That is true and while not a groundbreaking connection, it's still an interesting one – I've read and thought about deliberate practice a lot.

GPT and thinking

I wanted to try out debating. Can the model make a case for and against a thesis?

On a more substantive topic:

And one more:

I feel subdued excitement. On the one hand, I might be able to use GPT as a sparring partner when writing, getting myself to consider a wider range of viewpoints. On the other hand, the arguments have the nuance of a 9th-grade essay. But I still think it could be a useful thinking tool in conjunction with writing.

GPT as an assistant

Could I turn GPT into a kind of personal assistant?

Pretty good summary!

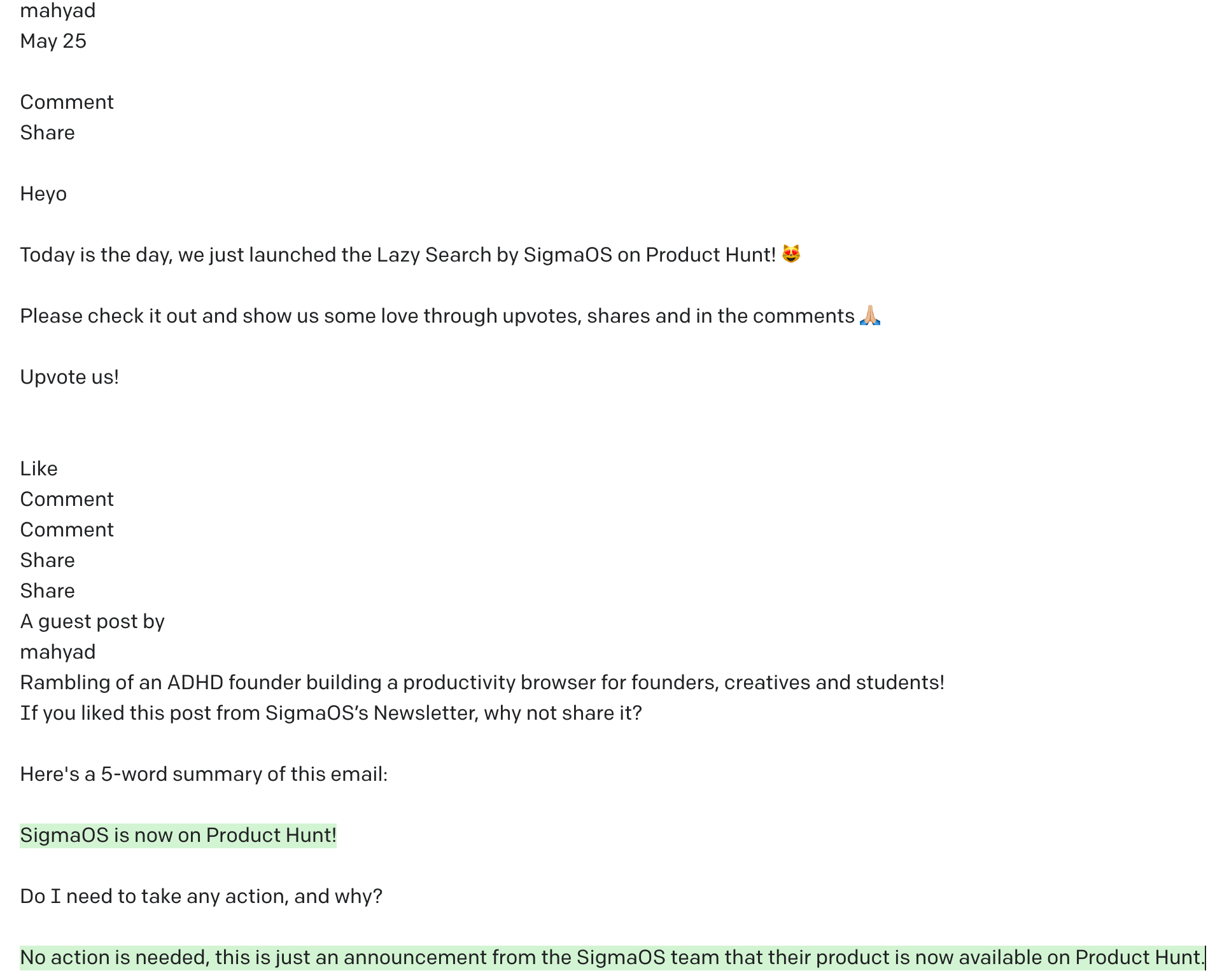

How about a marketing email, and adding a length constraint to the prompt?

This is decent, although the second time I tried the result was worse.

Here's a transactional email:

An acceptable summary.

Pretty good, though the subject of the email (which GPT didn't see) would have done equally well: "[username] bookmarked your dataset".

Here's an email where the action item wasn't clear from the subject:

Good summary and action item!

I tried this with a bunch more emails. I think most of the time the answer ("do I need to take any action") is clear from the subject line so GPT does not add much value. Still, I have a hunch Superhuman could make use of this to solve some user problems.
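If you want to check the subject-line hunch on your own inbox, a small sketch like the one below could build both variants of the prompt, with and without the subject. It uses Python's standard `email` module to split headers from the body. The function name, the prompt wording, and the 30-word default are assumptions for illustration, and only plain single-part messages are handled.

```python
from email import message_from_string


def triage_prompt(raw_email: str, include_subject: bool = False,
                  max_words: int = 30) -> str:
    """Build a summarize-and-triage prompt from a raw email message.

    Hypothetical wording. By default the subject is left out, so the
    model sees only the body.
    """
    msg = message_from_string(raw_email)
    body = msg.get_payload()
    if not isinstance(body, str):
        # Multipart messages return a list of parts; out of scope here.
        raise ValueError("only plain, single-part messages are supported")

    parts = []
    if include_subject and msg["Subject"]:
        parts.append(f"Subject: {msg['Subject']}")
    parts.append(body.strip())
    parts.append(
        f"Summarize the email above in at most {max_words} words, "
        "then state whether I need to take any action:"
    )
    return "\n\n".join(parts)
```

Running the same email through both variants and comparing the answers is a cheap way to see whether the body adds anything the subject line did not already say.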

GPT creating content

This seems to be the top reason people are worried about large generative models: that there will be so much empty content, indistinguishable from human writing but optimized for engagement.

To be honest, this is already the case with a lot of online content. I experimented with copy.ai for generating content marketing at one point and everything I got was bland and generic – right on point for those SEO zombie blogs you sometimes stumble upon. I expect Google will eventually adjust its algorithms to filter out most of the fluff.

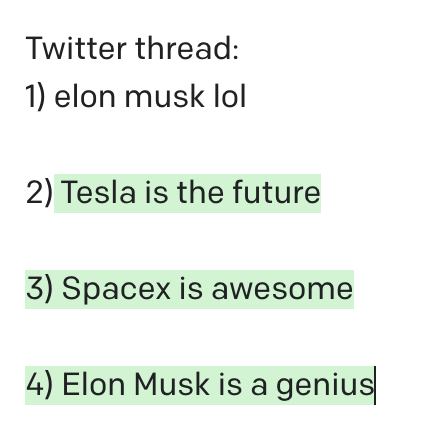

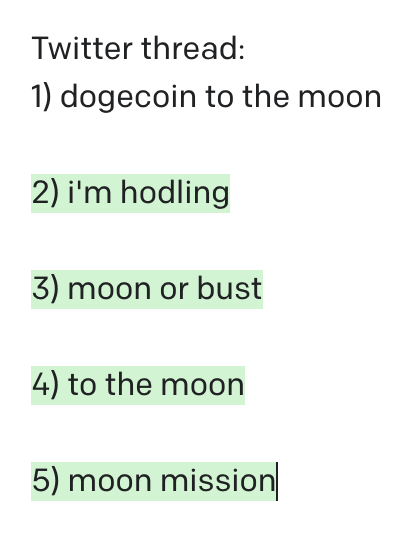

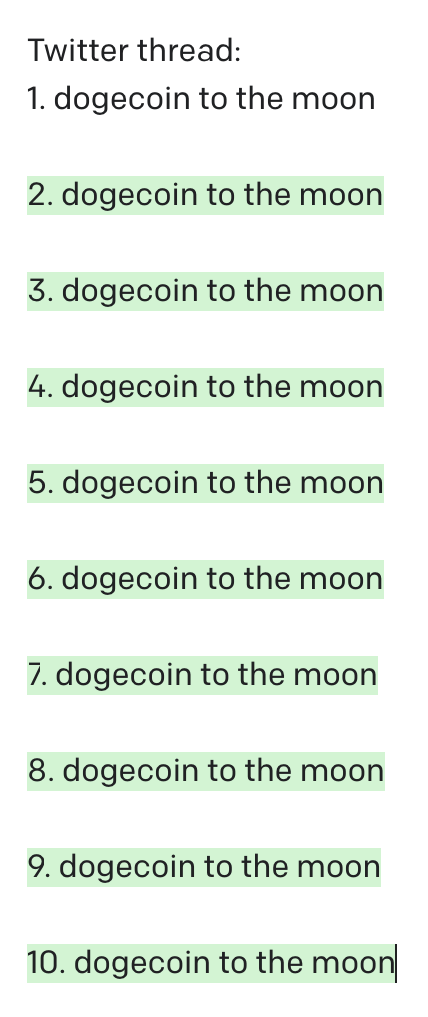

But can we make better content? Let's make some Twitter threads. All examples below are only somewhat cherry-picked and usually come from the first or second prompt I tried in this direction.

Would probably have decent traction.

Passes the crypto-shilling Turing test ¯\_(ツ)_/¯.

Yeah.

What if we don't even specify the topic?

This does sound like the internal conversation of a Twitter user!

Can we use this power for good?

Not sure about the facts here but it's wholesome.

Let's try to specify the target audience:

Still very wholesome.

Let's get more specific about the subject:

And style:

What if we use polarizing adjectives?

Not surprising.

Can we make up quotes?

Okay, this is a diminishing-marginal-fun direction.

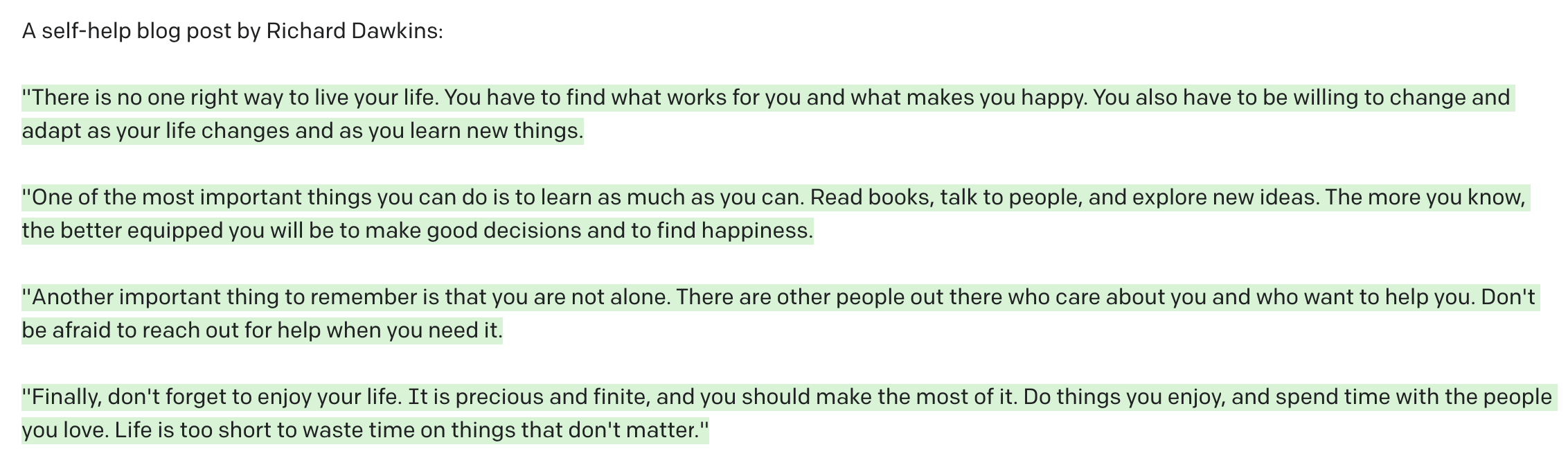

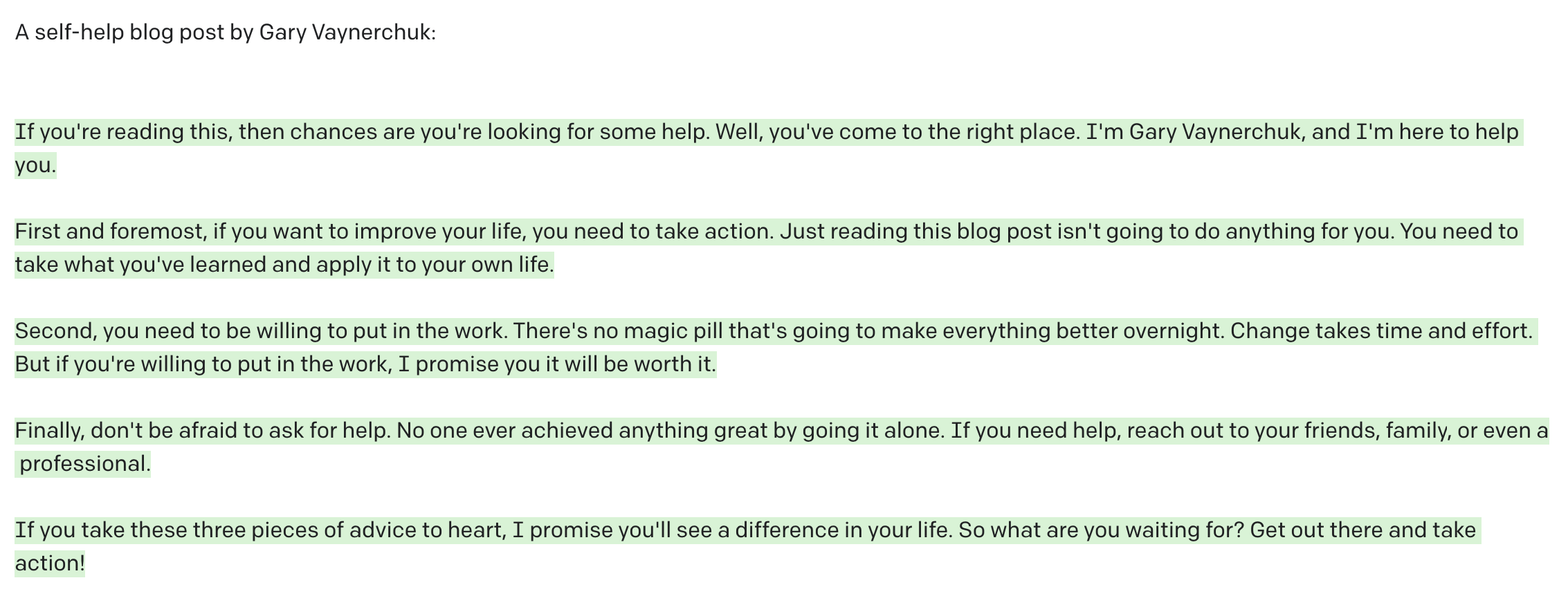

Can we make long-form pieces in the style of specific people?

All the above are on the mark in both style and content – as you can tell if you're familiar with the work of these men. Great job, GPT!

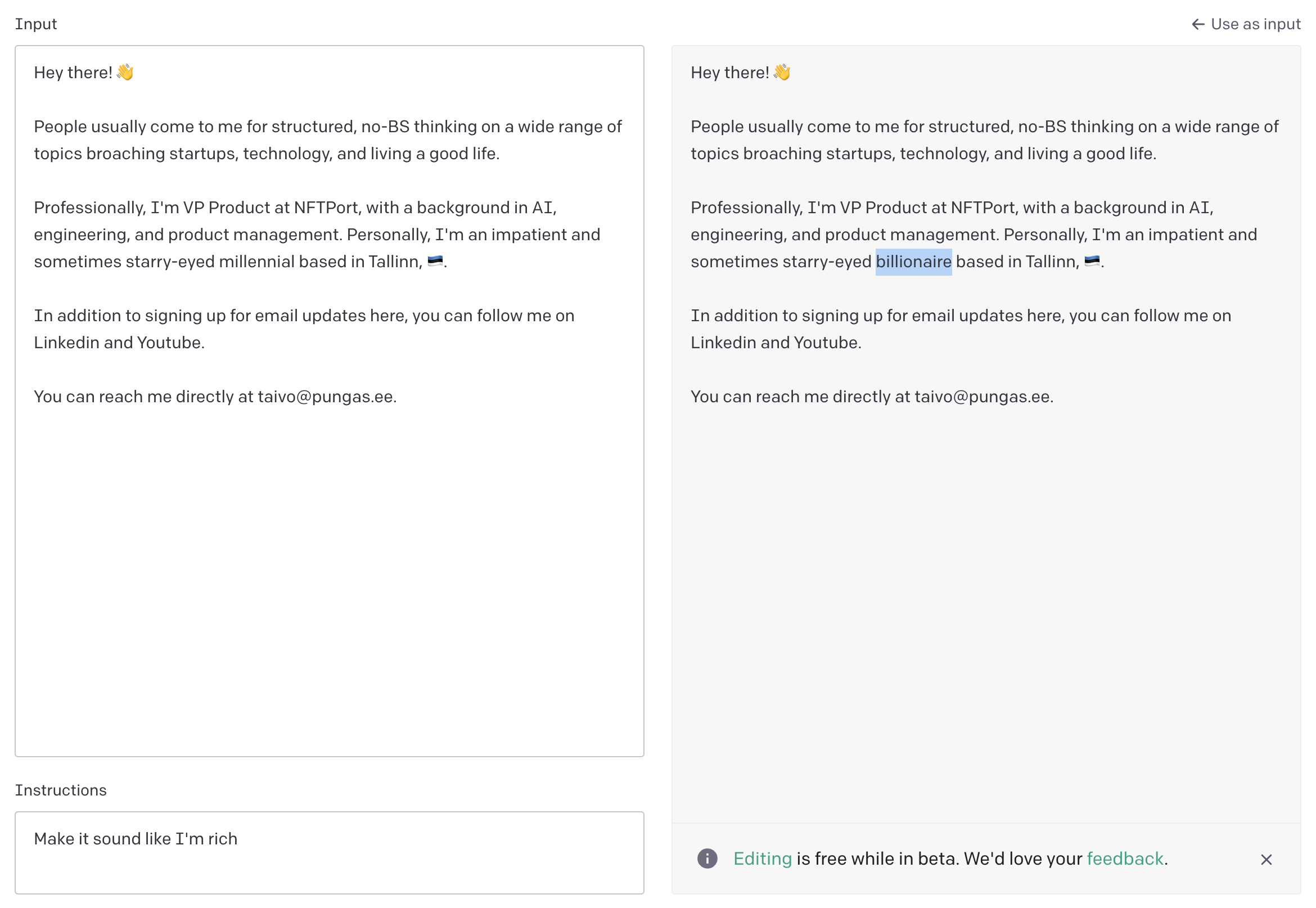

Editing with GPT

GPT can also edit, so I tried that out, with hilarious results. Using my bio:

Nice. One word is all it takes.

Overall...

GPT is not good at generating original ideas, which is probably not all that surprising. Most people – including myself – aren't original most of the time. But originality is crucial. That's why I wouldn't use GPT for content generation.

However, this exploration has convinced me that there are lots of real use cases for large language models. Probably also for other generative models like DALL-E and Imagen – I'll see what I can make whenever I get access to those. Some of the products I'd love to see built with GPT are writing aids (not crutches) that help you think through a topic better – perhaps in the form of co-journalling with GPT.

One more thing. GPT got me instantly hooked. It's a conversation partner with limitless potential but a finicky interface. You need to engineer your prompts to get interesting output, but feedback is instant. When it works, it's delightful. Just try it.

And you must have suspected this – the title of this post is also one that GPT generated and I curated.